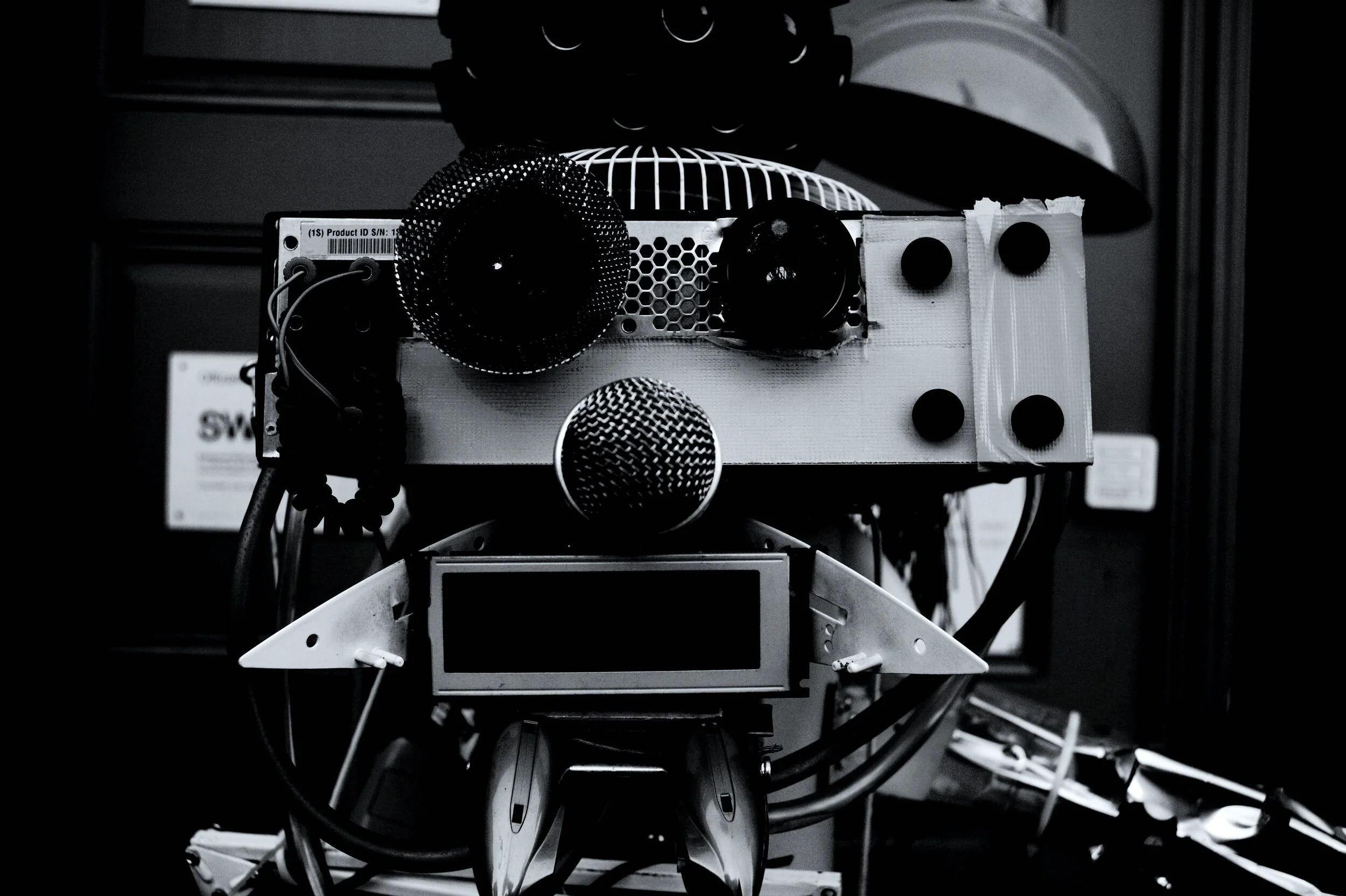

Will A.I. Voices Replace Voice Over Artists?

I recently read an article (or, more likely, a paid advertising post) that asked “Will Text to Speech Converters win the Voice Over Market?” In it, the author cited the rise in popularity of one particular text-to-speech platform, which is apparently growing at about 50% per month. The “synthetic” voices, the article claimed, are now good enough for most applications.

But is that really true?

Before I begin, let me remind you that I’m a voiceover artist, so my industry is watching the rise of text-to-speech very closely. It’s hard to argue that synthetic voices aren’t getting better, and for some short-form messages they can already replace a human voice. We’re a long way down the road from those robotic, concatenated text-to-speech voices, and the gap keeps narrowing as neural networks learn more about how real humans actually speak.

Breaking off our engagement

The problem that anyone who creates longer-form content runs into is loss of engagement. That’s true whether the script is recorded by a voice talent like me or by an AI voice. The key difference is that the longer people listen to a machine voice, the more the façade slips. After a couple of minutes, the repetitive patterns of rhythm, stress and intonation (what we call “prosody”) begin to give the game away. It’s just not the same as listening to a human being, so people start to tune out, whether that’s on e-learning content, audiobooks, or anything else.

Even the best synthetic voices are still a long way from replicating the natural speech patterns, prosody and nuance of a human being. And even a human who isn’t a trained voice talent can’t get the same results as someone who is. After all, that’s how people like me make our living in the first place…

Machines can’t look at the script and intuitively decide which words to lend weight to, which to gloss over, where to insert a cheeky smile… or a pause for effect… and so on. (Hell, the machines aren’t even smart enough to spot a crosswalk or a traffic light in a picture without our help, hence the vexatious CAPTCHA.)

The author of that article I mentioned earlier says “If I did not like the timbre of the voice or voice emotions – I change the character, add intonations, pauses, all sorts of um, hm … and so on. It’s done! Work on the dialogue took just 5 minutes and cost a few cents.”

Challenges for UX & Conversation Designers

It sounds great in theory, doesn’t it? But that argument has not one, not two, but three fundamental errors, as I see it:

Firstly, User Experience (UX) and Conversation Designers who work with voice generation will tell you that we don’t yet have a standard vocabulary, let alone a universally supported markup language, to describe all of these nuances. And that’s before we get into variations in speed, pitch, emotion, and everything else that makes a good voiceover recording. Without that, the script can’t be marked up for a machine to turn into natural speech. Currently, each voice generation platform has its own capabilities, limitations and methods for varying the delivery. And I’d contend that getting good results takes a lot more than five minutes.

Secondly, there remains the original problem: if a UX Designer or Conversation Designer has to go through a long script sentence by sentence, phrase by phrase, word by word, to get it sounding “almost human”, that’s a very time-consuming exercise. It also calls for a very specialised skill, more akin to that of a director in a voiceover session. It’s probably not something that anyone in a hurry to save time and money will want to do, or enjoy.

And thirdly – and I think this is really the key point – all of this assumes that you even know which words you want to hit, which phrases you want to lift, and so on. And that’s where your trained voice over actor makes their living in the first place. It’s a very particular skill set, to be able to look at a script and read it so that it sounds perfectly pitched for the “sales read”, the “conversational read”, or whatever read you’re going for. How many people without a background in voiceover production can do that? And that’s before we get into the realm of creating characters, each with their own nuances and speech patterns.

Prices in the voice over market are in flux too. With dubious thanks to the “commoditisation of voiceover” due to online casting, producers can now find a “real voice” at a significantly lower cost than was possible just a few years ago. If the price is right, why bother with all that messing around?

So, will synthetic voices and AI voices replace some of what my voiceover colleagues and I do? Absolutely. It’s already happening in some parts of the industry, like the cheaper end of e-learning and explainer videos. But I believe that in the long run we’re going to see a split between projects that are voiced by machines and projects that will still be voiced by voiceover artists. I’m watching the AI space closely, but I’m not hanging up my headphones any time soon.

The robots are certainly coming (I can see them marching up the valley…) But here’s the thing: you can hear them coming a mile off – and the longer you listen, the more like robots they sound.

(Banner picture by Natasa Grabovac on Unsplash)